As a society, we love social media – it’s the perfect way to fill a dull moment. But we’re becoming increasingly uncomfortable about the lack of emotional safety. Social media can be a cruel playground. The anonymity and ease-of-use allow impulsive emotional outbursts, as well as targeted attacks. Harassment, bullying and intimidation cause serious harm – often aimed at people in vulnerable groups, or in public life.

In the online world, the sheer volume of information makes it harder to police abusive behaviour.

David Lopez’s work offers a potential new line of defence. This could make our online communities feel more inclusive, safer spaces for everyone. David and his team are developing ‘LOLA’. She’s a sophisticated artificial intelligence, capable of picking up subtle nuances in language. This means she can detect emotional undertones, such as anger, fear, joy, love, optimism, pessimism or trust.

LOLA takes advantage of recent advances in natural language processing. Not only that, she also incorporates behavioural theory to infer stigma, giving her up to 98% accuracy. And she continues to learn and improve with each conversation she analyses.

Unlike human moderators, LOLA can analyse 25,000 texts per minute. This allows her to swiftly detect stigmatising behaviour. Thus, she highlights cyberbullying, hatred, Islamophobia and more.

We believe solutions to address online harms will combine human agency with AI-powered technologies that would greatly expand the ability to monitor and police the digital world.

In a recent use-case on fake news about Covid-19, LOLA found that this misinformation has strong components of fear and anger. This tells us that fear and anger are helping disseminate the fake news. Predictably, emotive language motivates people to pass on information, before giving it a second thought. For LOLA, it is straightforward to pinpoint the originators of the misinformation. In this way, we can reduce online harms.

In another use-case, LOLA could pinpoint the originators of cyberbullying against Greta Thunberg. LOLA grades each tweet with a severity score, and sequences them from ‘most likely to cause harm’ to ‘least likely’. Those at the top are the tweets which score highest in toxicity, obscenity and insult.
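To make the ranking step concrete, here is a minimal sketch in Python. It is not LOLA's actual implementation: the `ScoredTweet` class, the `rank_by_severity` function, the example scores and the choice to average toxicity, obscenity and insult into a single severity score are all assumptions made for illustration. The article does not say how LOLA combines these signals.

```python
from dataclasses import dataclass


@dataclass
class ScoredTweet:
    """A tweet with hypothetical per-category scores in the range 0 to 1."""
    text: str
    toxicity: float
    obscenity: float
    insult: float

    @property
    def severity(self) -> float:
        # Assumption: severity is the unweighted mean of the three scores.
        # The real system may weight or combine them differently.
        return (self.toxicity + self.obscenity + self.insult) / 3


def rank_by_severity(tweets: list[ScoredTweet]) -> list[ScoredTweet]:
    """Order tweets from 'most likely to cause harm' to 'least likely'."""
    return sorted(tweets, key=lambda t: t.severity, reverse=True)


# Placeholder data: texts and scores are invented for this example.
tweets = [
    ScoredTweet("tweet A", toxicity=0.1, obscenity=0.0, insult=0.2),
    ScoredTweet("tweet B", toxicity=0.9, obscenity=0.8, insult=0.7),
    ScoredTweet("tweet C", toxicity=0.5, obscenity=0.4, insult=0.3),
]

ranked = rank_by_severity(tweets)
for tweet in ranked:
    print(f"{tweet.severity:.2f}  {tweet.text}")
```

In a workflow like the one described, a human moderator would review the tweets at the top of such a list first.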

This kind of analysis is a valuable tool for cybersecurity services, at a time when social media companies are under increasing pressure to tackle online harms. The Government is in the process of passing regulations on online harms which give digital platforms a ‘duty of care’ for their users.

LOLA’s impact is already being recognised:

– She has been used in developing several research papers, for Business Ethics Quarterly, Information Systems Journal and Organization Science.

– David and his team are collaborating with the Spanish government and with Google.

– ‘CyberBlue’ (LOLA’s industry-oriented face) is on the IE GovTech List – the top 100 startups delivering solutions to government’s toughest challenges, in Spain and Latin America.

Read an English language sample of the IE GovTech list (PDF).

See LOLA and CyberBlue mentioned in the third-largest newspaper in Spain, El Diario Castilla y León.

Read more about David’s work: Mitigating Online Harms at speed and scale (PDF)

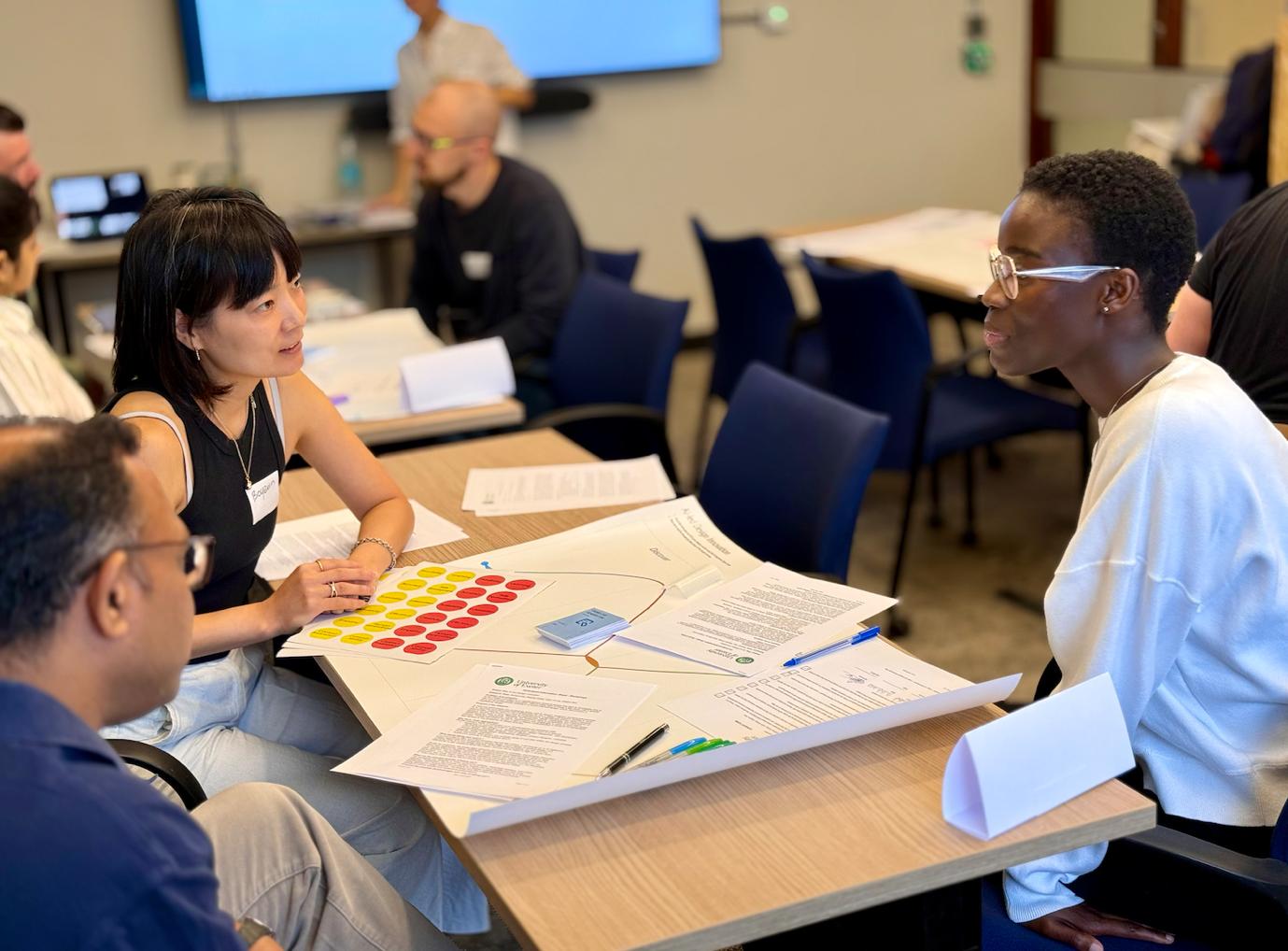

Photo by Becca Tapert on Unsplash